How ChatGPT/LLM capabilities can dramatically improve digital identity and authentication

Multi-modal, iterative, prompt-based customer verification changes everything

The workflows, data, and technologies that digital services use to verify users’ identities and authenticate them are inherently multi-modal exercises, sequenced or orchestrated within a “customer experience” (CX). ChatGPT and LLM capabilities have the potential to permanently change customer (and employee) identity verification (IDV) in sign-up and sign-in workflows, during transaction and payment processing, and in other types of high-value digital interactions that require identity verification.

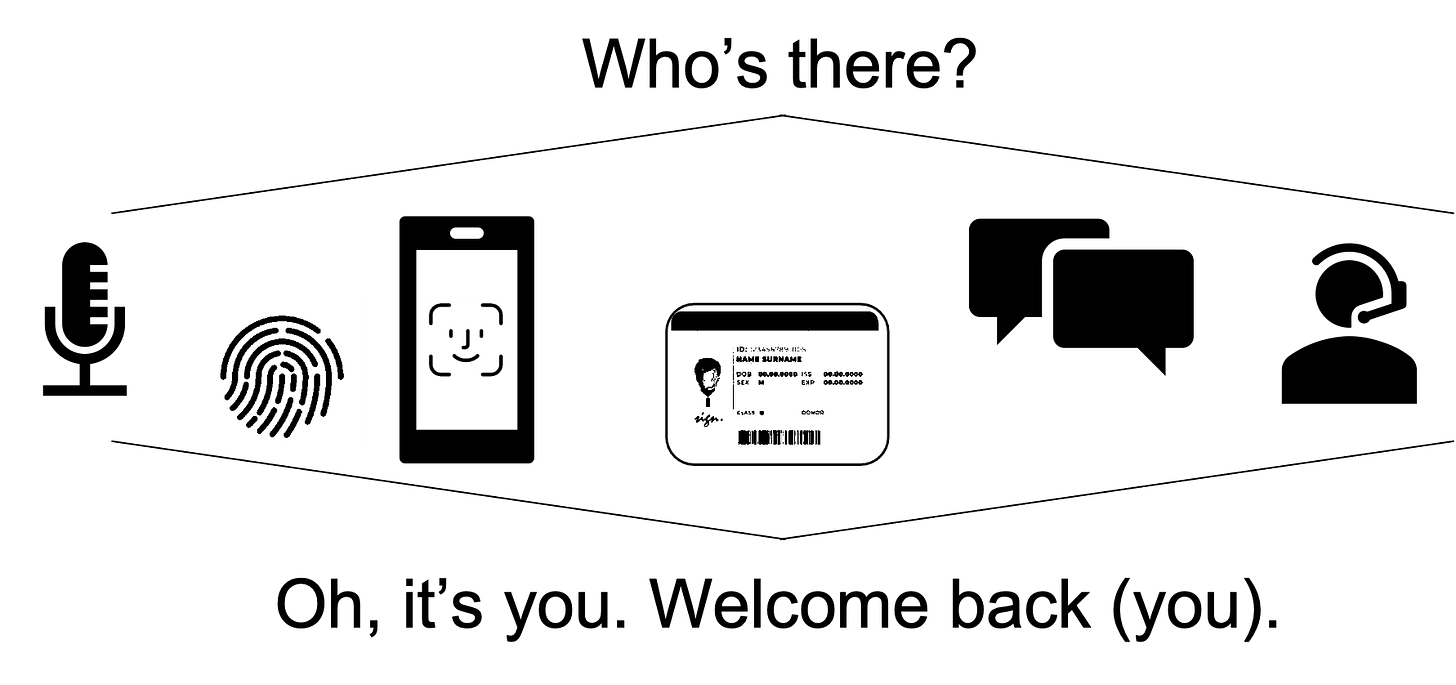

In the banking industry, for example, customers encounter multiple ways to verify their identity when signing into the bank’s website or mobile app, calling customer support, or checking in at the teller’s desk at a local branch. We are all familiar with the endless ways to verify who we are through some combination of methods: knowledge-based identity (passwords, personal information, and secrets like full name, last 4 of SSN, street address, answers to personal questions); device-based biometrics like Face ID or Touch ID, alone or in combination with passkey-based multi-factor authentication; voice ID (“my name is my identity, please verify me”); or even recent data from your service (“what are the last two transactions on your credit card?”).

But the problem is that these methods are largely disconnected from one another. For privacy reasons, personally identifiable information (PII) must be protected in line with each country’s and industry’s regulatory requirements. There are few easy ways to combine IDV methods as customers move around within an enterprise, and no simple way at all to verify customers moving between (partnered) enterprises. How frustrating is it when we call customer support, and they verify our identity and then pass us to technical support, who verify our identity again? Each business unit verifies your identity independently of the others. The iterative, conversational capabilities of Generative AI could completely change today’s (highly repetitive) identity verification paradigm.
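To make the hand-off problem concrete, here is a minimal sketch of how a second business unit might reuse a verification that the first unit already performed, rather than starting over. Everything in it is an assumption for illustration: the `Verification` record, the 1-to-3 assurance scale, and the `can_reuse` helper are hypothetical names, not part of any real identity platform, and a real system would also need to protect and sign such a record.

```python
from dataclasses import dataclass
import time
from typing import Optional


@dataclass(frozen=True)
class Verification:
    """Hypothetical record of one completed identity check."""
    customer_id: str
    method: str         # e.g. "voice_id" or "passkey" (illustrative labels)
    assurance: int      # assumed scale: 1 (low) to 3 (high)
    verified_at: float  # seconds since the epoch


def can_reuse(v: Verification, required_assurance: int,
              max_age_s: float, now: Optional[float] = None) -> bool:
    """Return True if an earlier check is recent and strong enough to reuse."""
    now = time.time() if now is None else now
    is_fresh = (now - v.verified_at) <= max_age_s
    return is_fresh and v.assurance >= required_assurance


# Customer support verified the caller by voice two minutes before the transfer.
support_check = Verification("cust-42", "voice_id", assurance=2, verified_at=1000.0)

# Technical support accepts medium assurance within ten minutes: no repeat check.
print(can_reuse(support_check, required_assurance=2, max_age_s=600, now=1120.0))

# A payments team that requires high assurance would still ask for more.
print(can_reuse(support_check, required_assurance=3, max_age_s=600, now=1120.0))
```

The point of the sketch is only that the second team consults a shared result before asking the customer anything; how that result is stored and trusted across units is left open here.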

The permutations of these many methods are seemingly endless, with new identity technologies emerging regularly. (There are over 760 authenticators, custom and native, on mobile devices now.) It is no wonder customers feel like 10% or more of their day is now taken up by endless sign-ins, doing two-factor or multi-factor authentication, finding e-mails and text codes, or opening authentication apps and selecting the right code. And while customers are jumping through all of these IDV hoops, cyber-criminals are waiting, watching, and listening for any clues to customers’ account credentials as they pass between customers, their devices, and the servers or customer support personnel of the digital service (and vice versa). “Signing in” has become painful, exhausting, and risky.

The multi-modal capabilities of next-level generative AI, like those coming from ChatGPT (GPT stands for Generative Pre-trained Transformer), Google’s Bard, and Microsoft’s Bing, offer strong potential to reduce all of this sign-in work, pain, friction, and risk. These AI language models from OpenAI and other labs are being developed to answer questions, translate languages, and generate contextually relevant text for chatbots. They already work across (and learn from) large amounts of text, graphics, video, and audio data in different combinations.

Since generative AI lets machines “learn” from multi-modal data sources, it seems natural that these models would be well suited to learning a user’s identity from multiple perspectives, too - much like how our human brains learn the identities of people in our lives. From a very young age, children experience “unsupervised” learning by watching, listening to, and sensing their parents and other people in their (early) lives. As with Gen-AI, the other kind of training children receive, “supervised” learning, happens later in life, when instructors teach them in schools.

Whether it is good or bad for AI models to “know you” is an important and valuable discussion, and one worth debating at length. But the capability is here today. As an innovation product professional, I can see how new types of enterprise identity and authentication platforms, offering point-and-click orchestration and sequencing of different identity-verifying methods across multi-modal data sources, will easily replace human beings and today’s static sign-in flows to verify customer identity.

Much more contextually relevant, natural-language ways of verifying customers are coming, and they will use a lot more than just today’s basic methods to verify customer identity. Knowledge data and biometrics are a given. But add to these (legacy) methods newer multi-modal methods based on device behavioral and sensor data, user environmental data, or enterprise transactional data, and you can easily envision how low-friction, high-assurance, and phishing-proof methods of identifying and authenticating people on devices to digital services are just around the proverbial ChatGPT corner. They will be faster, better, much less annoying, and much more secure. Wouldn’t it be nice to eliminate the low-level anxiety associated with account takeover risk while accessing the daily digital services we need without any 2FA friction? I can’t wait for that day to happen, and IMHO, it can’t happen soon enough.
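As a rough illustration of what combining several identity signals into one decision could look like, here is a minimal sketch. It assumes each signal reports a confidence between 0 and 1 and carries a weight, and it uses a simple weighted average with two thresholds. The signal names, weights, thresholds, and the `Signal`, `combined_score`, and `decide` names are all hypothetical; this is not how any particular product or model scores identity.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Signal:
    """Hypothetical output of one identity-verifying method."""
    name: str
    confidence: float  # 0.0 (no match) to 1.0 (certain match), assumed scale
    weight: float      # how much this method counts, assumed and illustrative


def combined_score(signals: List[Signal]) -> float:
    """Weighted average of signal confidences; 0.0 when there are no signals."""
    total_weight = sum(s.weight for s in signals)
    if total_weight == 0:
        return 0.0
    return sum(s.confidence * s.weight for s in signals) / total_weight


def decide(signals: List[Signal], allow_at: float = 0.8,
           step_up_at: float = 0.5) -> str:
    """Map the combined score to 'allow', 'step_up' (ask for more), or 'deny'."""
    score = combined_score(signals)
    if score >= allow_at:
        return "allow"
    if score >= step_up_at:
        return "step_up"
    return "deny"


# Several strong signals together: the customer gets in without extra prompts.
strong = [
    Signal("device_biometric", 0.95, weight=3.0),
    Signal("typing_behavior", 0.70, weight=1.0),
    Signal("recent_transaction_answer", 0.90, weight=2.0),
]

# Only weak, ambient signals: the flow asks for one more method.
weak = [
    Signal("typing_behavior", 0.60, weight=1.0),
    Signal("usual_location", 0.50, weight=1.0),
]

print(decide(strong))
print(decide(weak))
```

A weighted average is only one possible choice. The design idea it shows is that extra friction is requested only when the signals already collected fall short of the threshold.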